Darius Taylor, WestEd and University of Massachusetts, Amherst

As a 29-year-old black man who grew up in a low socio-economic status community in the Chicago suburbs, I find it tough to explain to my family and friends what a psychometrician actually does. I usually end up saying something along the lines of “I work in educational and psychological testing.” Their immediate response is: “OH, so you’re gonna make testing better?!” I pause and consider explaining that, for the past three years in my measurement coursework, I’ve been taught that one must try hard to make a biased assessment given the many steps involved in test construction. Instead, I simply ask, “What does ‘make testing better’ mean for you?” As I listen to their typically long-winded response, I realize how jaded my people are with respect to testing. These informal interviews typically share similar themes, which may be rooted in the lack of pluralistic values in the psychometric community.

My transition to the field of education from a background in epidemiology and social indicators of health has shaped the discovery of my niche in educational measurement. This population health mindset, coupled with my life experiences (and those of others who look like me), has led me to social justice, and specifically to an inquiry into where social justice spaces live and thrive in educational measurement. It didn’t take long for me to pick up on buzzwords like DIF (differential item functioning) and fairness. What has left the greatest imprint on my bleeding heart are the validity theories of Samuel Messick, which encourage measurement researchers to consider the social consequences of testing.

Messick’s 1989 chapter on validity was first introduced to me in my Spring 2018 course, Advanced Validity and Test Validation, taught by our NCME President, Dr. Steve Sireci. Messick’s feedback loop for test validation inspired my NCME Graduate Student proposal submission and my recently passed comprehensive paper defense, which Dr. Sireci chaired.

Evaluating Social Consequences in Testing: The Disconnect Between Theory and Practice

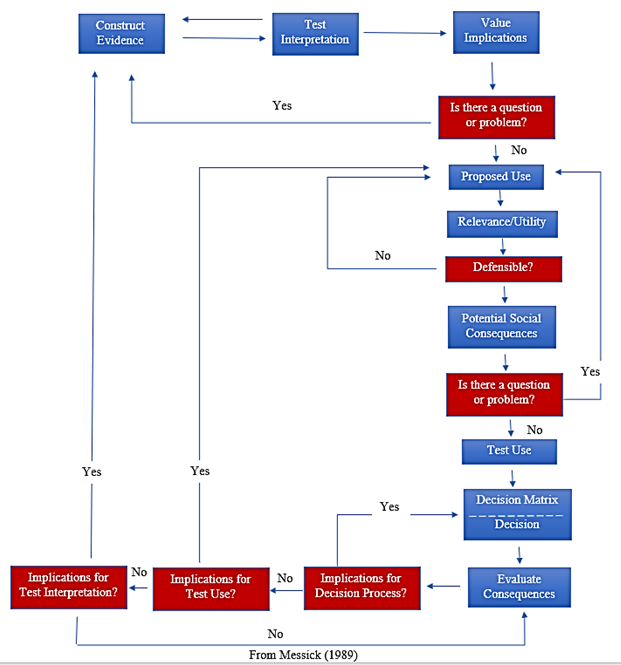

To remain unbiased in our assessment practices, we must continue to evaluate the social impacts of testing and the values embedded in test score interpretation. But how do we evaluate these values and their impact? Messick (1989) presented a feedback loop (see Figure 1) for test validation that urges developers to consider social implications at multiple steps in the process. He presents a dynamic and interactive process for validating the use of a test for a particular purpose. It starts with an iterative process of gathering construct validity evidence, which leads to efficient score interpretation. If score interpretation aligns with the values (and assumptions) associated with the construct, then the score (and test) can be considered for a proposed use. This is the first feedback loop. Once a test is considered for a proposed use, a similar feedback loop is employed to assess whether test scores are defensible with respect to relevance and utility for an applied purpose. This is the second feedback loop. If relevance and utility are not defensible, then the proposed use of the test, or the methods used to investigate relevance and utility, are re-assessed. If relevance and utility are confirmed, social consequences are evaluated. This is the third feedback loop. It investigates the implications of the test (or testing system) being used for a particular purpose in society. Are there differential attitudes, knowledge, and behaviors across subgroups in society as a result of this test being used for a particular purpose? If no potential negative social consequences exist, it is safe to assume the test can be used for that purpose.

Figure 1. Messick’s (1989) Test Validation Feedback Loop

Although Messick’s unified validity approach is well recognized and respected (e.g., Lane, Parke, & Stone, 1998; Popham, 1997; Shepard, 1993), the extent to which social implications and intrinsic values are actually considered in past and current test validation efforts is unclear. The discovery of negative social consequences should require researchers to loop back and re-examine the proposed use and its defensibility with respect to relevance and utility. However, as Engelhard and Wind (2013) and Haertel (2013) pointed out, many testing programs do not even consider the social consequences of testing in their test validation efforts.

I agree with Messick (1980, 1989), as well as others (e.g., Engelhard & Wind, 2013; Haertel, 2013; Lane, 2014; Lane, Parke, & Stone, 1998; Lane & Stone, 2002; Poggio, Ramler, & Lyons, 2018), that it is critically important to study the consequences of testing because doing so helps test developers and users evaluate whether test score interpretations have deviated from their intended uses. Such an investigation leads to an understanding of the societal values placed on specific tests, which may also explain biases associated with the use of certain constructs, scores, and score interpretations. As Messick (1989) described, “Values are important to [consider] in score interpretation not only because they can directly bias score-based inferences and action, but because they could also indirectly influence in more subtle and insidious ways the meanings and implications attributed to test scores” (p. 59).

Unfortunately, social consequences are often overlooked or diluted within the educational testing community. Cizek, Bowen, and Church (2010) published a systematic review of the literature to evaluate the prevalence of research studies focused on consequential validity. The authors did not find any articles focused on “consequential validity” among the 1,007 articles scanned from 1999 to 2008. As a result, they concluded that the fields of educational and psychological measurement should reconsider treating validity evidence based on the consequences of test score use as an integral part of the validity conversation and should instead create a space for the consequences of test score use apart from validity theory.

Contrary to the recommendations of Cizek et al. (2010), I believe that validity evidence based on the consequences of testing is an important part of validating a test and its use for a particular purpose. It belongs in the validity conversation. To reject the use of consequential evidence in building a validity argument (alongside the other four sources of validity evidence) is a disservice to those who have the largest stake in the matter: examinees.

After my comprehensive literature search, I found only 16 studies since the shift in validity theory in 1999 that actually addressed social consequences. Shepard (1997) stated, “most measurement specialists acknowledge that issues of social justice and testing effects are useful ideas, but some dispute whether such issues should be addressed as part of test validity. They worry that addressing consequences will overburden the concept of validity or overwork test makers” (p. 5). Her claim is congruent with Messick (1989), who stated that “the terminal value of the test in relation to the social ends to be served goes beyond the test maker to include the decision maker, the policy maker, and the test user” (p. 89). Thus, this is a community responsibility, but that does not mean test developers should be free to wash their hands of a test once it is deemed fit for its purported purpose in society.

The results of my study confirm previous authors’ conclusions that testing consequences have historically not been of great concern to the assessment community. It is time the assessment community took a clear position on the social consequences of testing. There have been years of teaching and preaching on its use, misuse, and importance to overall validity. I believe that there should be a place for social justice approaches to assessment, and for their theorization, in modern testing standards and operations.

References

Cizek, G. J., Bowen, D., & Church, K. (2010). Sources of validity evidence for educational and psychological tests: A follow-up study. Educational and Psychological Measurement, 70(5), 732–743.

Engelhard, G., & Wind, S. A. (2013). Educational testing and schooling: Unanticipated consequences of purposive social action. Measurement: Interdisciplinary Research and Perspectives, 11(1–2), 30–35.

Haertel, E. (2013). How is testing supposed to improve schooling? Measurement: Interdisciplinary Research and Perspectives, 11, 1–18.

Lane, S. (2014). Validity evidence based on testing consequences. Psicothema. doi: 10.7334/psicothema2013.258.

Lane, S., Parke, C. S., & Stone, C. A. (1998). A framework for evaluating the consequences of assessment programs. Educational Measurement: Issues and Practice, 17(2), 24–28.

Lane, S., & Stone, C. A. (2002). Strategies for examining the consequences of assessment and accountability programs. Educational Measurement: Issues and Practice, 21(1), 23–41.

Messick, S. (1980). Test validity and the ethics of assessment. American Psychologist, 35, 1012–1027.

Messick, S. (1989). Validity. In R. Linn (Ed.), Educational measurement (3rd ed., pp. 13–100). Washington, D.C.: American Council on Education.

Poggio, J., Ramler, P., & Lyons, S. (2018). Consequential validation: Where are we after 25 years? Presented at the annual meeting of the National Council on Measurement in Education, New York, NY, April 16, 2018.

Shepard, L. A. (1993). Evaluating test validity. Review of Research in Education, 19, 405–450.

Shepard, L. A. (1997). The centrality of test use and consequences for test validity. Educational Measurement: Issues and Practice, 16(2), 5–24.